[TensorRT] Issues that can arise when a PyTorch model uses a Squeeze + Unsqueeze + expand_as combination

Environment

PyTorch 1.4.0

TensorRT 7.1.3.4

CUDA 10.2

cuDNN 8.0.0

This post describes an issue that can occur when part of a PyTorch model's forward pass uses a squeeze + transpose + unsqueeze + expand_as combination and the model is then converted PyTorch → ONNX → TensorRT.
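To make the combination concrete, here is a pure-Python sketch that tracks only tensor shapes through that part of the forward pass. The parameter name `eca.conv.weight` in the graph below suggests an ECA-style channel-attention block. The helpers `squeeze`, `transpose`, `unsqueeze` and `expand_as` are hypothetical stand-ins that mimic the shape rules of the PyTorch ops of the same name. The sizes (batch 1, 5 channels, 5x5 feature map) are taken from the ONNX graph below, and the Conv1d is assumed to keep the length unchanged (kernel 3, padding 1).

```python
# Shape-only trace of the squeeze/transpose/unsqueeze/expand_as pattern.
# These helpers are hypothetical stand-ins for the PyTorch ops of the same name.

def squeeze(shape, dim):
    # Drop a size-1 axis, like tensor.squeeze(dim) on a size-1 dim.
    dim %= len(shape)
    assert shape[dim] == 1, "only size-1 axes can be squeezed"
    return shape[:dim] + shape[dim + 1:]

def unsqueeze(shape, dim):
    # Insert a size-1 axis; a negative dim counts from the end of the result.
    dim %= len(shape) + 1
    return shape[:dim] + (1,) + shape[dim:]

def transpose(shape, d0, d1):
    # Swap two axes, like tensor.transpose(d0, d1).
    s = list(shape)
    d0 %= len(s)
    d1 %= len(s)
    s[d0], s[d1] = s[d1], s[d0]
    return tuple(s)

def expand_as(shape, target):
    # Stretch size-1 axes up to the target shape, like tensor.expand_as(other).
    assert len(shape) == len(target)
    assert all(a in (1, b) for a, b in zip(shape, target))
    return tuple(target)

x = (1, 5, 5, 5)      # feature map from the first Conv2d (N, C, H, W)
y = (1, 5, 1, 1)      # after global average pooling
steps = [("avg_pool", y)]
y = squeeze(y, -1)
steps.append(("squeeze(-1)", y))          # (1, 5, 1)
y = transpose(y, -1, -2)
steps.append(("transpose(-1, -2)", y))    # (1, 1, 5): channels become the 1D length
# Conv1d(1, 1, kernel_size=3, padding=1) keeps the length, so the shape is unchanged.
steps.append(("conv1d", y))
y = transpose(y, -1, -2)
steps.append(("transpose(-1, -2)", y))    # (1, 5, 1)
y = unsqueeze(y, -1)
steps.append(("unsqueeze(-1)", y))        # (1, 5, 1, 1)
y = expand_as(y, x)
steps.append(("expand_as(x)", y))         # (1, 5, 5, 5)

for name, shape in steps:
    print(f"{name:>18}: {shape}")
```

Every one of these shapes is static in PyTorch and in the exported ONNX graph. The rank changes 4 → 3 → 4 are the part that the TensorRT parser later has to reconstruct.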

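In short, when this combination is converted to TensorRT, a dynamic dimension, -1, appears partway through the network, and the converted result seems to get distorted. The upshot is (PyTorch result == ONNX result) != TensorRT result. This becomes especially obvious when the model has several output nodes (the output order gets shuffled). It seems that as TensorRT internally fuses and splits(?) nodes during conversion, the intermediate results get scrambled.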

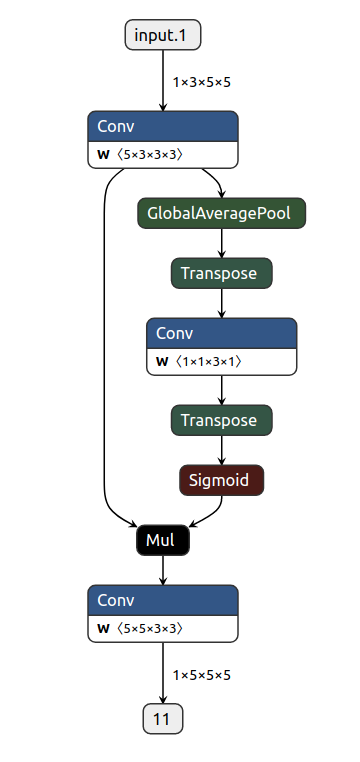

The figure above shows the ONNX visualization of a model built with this combination.

The ONNX graph nodes of this model are as follows.

graph(%input.1 : Float(1, 3, 5, 5),

%eca.conv.weight : Float(1, 1, 3),

%m1.weight : Float(5, 3, 3, 3),

%m2.weight : Float(5, 5, 3, 3)):

%4 : Float(1, 5, 5, 5) = onnx::Conv[dilations=[1, 1], group=1, kernel_shape=[3, 3], pads=[1, 1, 1, 1], strides=[1, 1]](%input.1, %m1.weight) # /home/seohee/.local/lib/python3.5/site-packages/torch/nn/modules/conv.py:342:0

%5 : Float(1, 5, 1, 1) = onnx::GlobalAveragePool(%4) # /home/seohee/.local/lib/python3.5/site-packages/torch/nn/functional.py:768:0

%6 : Float(1, 5, 1) = onnx::Squeeze[axes=[-1]](%5) # /home/seohee/Project/convert/mini_test/mini_model.py:437:0

%7 : Float(1, 1, 5) = onnx::Transpose[perm=[0, 2, 1]](%6) # /home/seohee/Project/convert/mini_test/mini_model.py:437:0

%8 : Float(1, 1, 5) = onnx::Conv[dilations=[1], group=1, kernel_shape=[3], pads=[1, 1], strides=[1]](%7, %eca.conv.weight) # /home/seohee/.local/lib/python3.5/site-packages/torch/nn/modules/conv.py:202:0

%9 : Float(1, 5, 1) = onnx::Transpose[perm=[0, 2, 1]](%8) # /home/seohee/Project/convert/mini_test/mini_model.py:437:0

%10 : Float(1, 5, 1, 1) = onnx::Unsqueeze[axes=[-1]](%9) # /home/seohee/Project/convert/mini_test/mini_model.py:437:0

%11 : Float(1, 5, 1, 1) = onnx::Sigmoid(%10) # /home/seohee/.local/lib/python3.5/site-packages/torch/nn/modules/activation.py:271:0

%12 : Tensor = onnx::Shape(%4)

%13 : Float(1, 5, 5, 5) = onnx::Expand(%11, %12) # /home/seohee/Project/convert/mini_test/mini_model.py:439:0

%14 : Float(1, 5, 5, 5) = onnx::Mul(%4, %13) # /home/seohee/Project/convert/mini_test/mini_model.py:439:0

%15 : Float(1, 5, 5, 5) = onnx::Conv[dilations=[1, 1], group=1, kernel_shape=[3, 3], pads=[1, 1, 1, 1], strides=[1, 1]](%14, %m2.weight) # /home/seohee/.local/lib/python3.5/site-packages/torch/nn/modules/conv.py:342:0

return (%15)
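Note that ONNX `Mul` already supports NumPy-style multidirectional broadcasting. So the `Shape` + `Expand` pair (%12, %13) is not needed to get the right result: multiplying %4 directly by the (1, 5, 1, 1) gate %11 yields the same (1, 5, 5, 5) shape. The sketch below implements that broadcasting rule for static shapes only. It illustrates the rule and is not code taken from ONNX or TensorRT.

```python
def broadcast_shapes(a, b):
    """NumPy/ONNX-style multidirectional broadcasting of two static shapes."""
    # Right-align the shapes by left-padding the shorter one with 1s.
    n = max(len(a), len(b))
    a = (1,) * (n - len(a)) + tuple(a)
    b = (1,) * (n - len(b)) + tuple(b)
    out = []
    for da, db in zip(a, b):
        if da == db or db == 1:
            out.append(da)
        elif da == 1:
            out.append(db)
        else:
            raise ValueError(f"incompatible dims {da} and {db}")
    return tuple(out)

feature = (1, 5, 5, 5)   # %4: output of the first Conv
gate = (1, 5, 1, 1)      # %11: Sigmoid output
print(broadcast_shapes(feature, gate))   # (1, 5, 5, 5)
```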

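Reading the TensorRT parser's verbose log node by node shows where the dynamic dimension -1 first appears during conversion. The Squeeze, Transpose, Conv, Unsqueeze and Sigmoid nodes all keep static shapes. The output of the Expand node, however, is reported as (-1, -1, -1, -1), and the -1 then carries into Mul and the final Conv.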

...

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Squeeze]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 5

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Squeeze] inputs: [5 -> (1, 5, 1, 1)],

[TensorRT] VERBOSE: onnx2trt_utils.cpp:1641: Original shape: (1, 5, 1, 1), squeezing to: (1, 5, 1)

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 2) [Shuffle] for ONNX node:

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 6 for ONNX tensor: 6

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Squeeze] outputs: [6 -> (1, 5, 1)],

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Transpose]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 6

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Transpose] inputs: [6 -> (1, 5, 1)],

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 3) [Shuffle] for ONNX node:

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 7 for ONNX tensor: 7

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Transpose] outputs: [7 -> (1, 1, 5)],

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Conv]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 7

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: eca.conv.weight

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Conv] inputs: [7 -> (1, 1, 5)], [eca.conv.weight -> (1, 1, 3)],

[TensorRT] VERBOSE: builtin_op_importers.cpp:450: Convolution input dimensions: (1, 1, 5)

[TensorRT] VERBOSE: onnx2trt_utils.cpp:1793: Original shape: (1, 1, 5), unsqueezing to: (1, 1, 5, 1)

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 5) [Convolution] for ONNX node:

[TensorRT] VERBOSE: onnx2trt_utils.cpp:1641: Original shape: (1, 1, 5, 1), squeezing to: (1, 1, 5)

[TensorRT] VERBOSE: builtin_op_importers.cpp:533: Using kernel: (3, 1), strides: (1, 1), prepadding: (1, 0), postpadding: (1, 0), dilations: (1, 1), numOutputs: 1

[TensorRT] VERBOSE: builtin_op_importers.cpp:534: Convolution output dimensions: (1, 1, 5, 1)

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 8 for ONNX tensor: 8

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Conv] outputs: [8 -> (1, 1, 5)],

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Transpose]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 8

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Transpose] inputs: [8 -> (1, 1, 5)],

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 7) [Shuffle] for ONNX node:

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 9 for ONNX tensor: 9

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Transpose] outputs: [9 -> (1, 5, 1)],

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Unsqueeze]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 9

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Unsqueeze] inputs: [9 -> (1, 5, 1)],

[TensorRT] VERBOSE: onnx2trt_utils.cpp:1793: Original shape: (1, 5, 1), unsqueezing to: (1, 5, 1, 1)

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 8) [Shuffle] for ONNX node:

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 10 for ONNX tensor: 10

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Unsqueeze] outputs: [10 -> (1, 5, 1, 1)],

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Sigmoid]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 10

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Sigmoid] inputs: [10 -> (1, 5, 1, 1)],

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 9) [Activation] for ONNX node:

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 11 for ONNX tensor: 11

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Sigmoid] outputs: [11 -> (1, 5, 1, 1)],

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Shape]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 4

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Shape] inputs: [4 -> (1, 5, 5, 5)],

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 10) [Shape] for ONNX node:

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 12 for ONNX tensor: 12

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Shape] outputs: [12 -> (4)],

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Expand]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 11

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 12

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Expand] inputs: [11 -> (1, 5, 1, 1)], [12 -> (4)],

[TensorRT] WARNING: onnx2trt_utils.cpp:220: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 17) [Slice] for ONNX node:

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 13 for ONNX tensor: 13

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Expand] outputs: [13 -> (-1, -1, -1, -1)],

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Mul]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 4

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 13

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Mul] inputs: [4 -> (1, 5, 5, 5)], [13 -> (-1, -1, -1, -1)],

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 18) [ElementWise] for ONNX node:

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 14 for ONNX tensor: 14

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Mul] outputs: [14 -> (-1, 5, 5, 5)],

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Conv]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 14

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: m2.weight

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Conv] inputs: [14 -> (-1, 5, 5, 5)], [m2.weight -> (5, 5, 3, 3)],

[TensorRT] VERBOSE: builtin_op_importers.cpp:450: Convolution input dimensions: (-1, 5, 5, 5)

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 19) [Convolution] for ONNX node:

[TensorRT] VERBOSE: builtin_op_importers.cpp:533: Using kernel: (3, 3), strides: (1, 1), prepadding: (1, 1), postpadding: (1, 1), dilations: (1, 1), numOutputs: 5

[TensorRT] VERBOSE: builtin_op_importers.cpp:534: Convolution output dimensions: (-1, 5, 5, 5)

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 15_1 for ONNX tensor: 15

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Conv] outputs: [15 -> (-1, 5, 5, 5)],

[TensorRT] VERBOSE: ModelImporter.cpp:507: Marking 15_1 as output: 15

...
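The log shows the unknown dimension spreading:

- `Expand` produces (-1, -1, -1, -1).
- `Mul` with the known (1, 5, 5, 5) tensor narrows it to (-1, 5, 5, 5).
- `Conv` passes the -1 batch dimension straight through.

The sketch below reproduces that pattern with a simple rule for broadcasting when one dimension is unknown. The rule is an assumption modeled on the log output, not TensorRT's actual shape-inference code.

```python
UNKNOWN = -1

def broadcast_dim(a, b):
    # Both known: ordinary broadcasting.
    if a != UNKNOWN and b != UNKNOWN:
        if a == b or b == 1:
            return a
        if a == 1:
            return b
        raise ValueError(f"incompatible dims {a} and {b}")
    # One side unknown: if the known side is > 1, the result must equal it
    # (the unknown side can only be 1 or that value). If the known side is 1,
    # the result is whatever the unknown side turns out to be at runtime.
    known = b if a == UNKNOWN else a
    if known == UNKNOWN or known == 1:
        return UNKNOWN
    return known

def broadcast(a, b):
    assert len(a) == len(b), "ranks are known at build time"
    return tuple(broadcast_dim(x, y) for x, y in zip(a, b))

def conv2d_same(shape, out_channels):
    # 3x3 conv, stride 1, padding 1: batch passes through, spatial dims are kept.
    n, _, h, w = shape
    return (n, out_channels, h, w)

expand_out = (UNKNOWN,) * 4                     # tensor 13
mul_out = broadcast((1, 5, 5, 5), expand_out)   # tensor 14
conv_out = conv2d_same(mul_out, 5)              # tensor 15
print(mul_out, conv_out)   # (-1, 5, 5, 5) (-1, 5, 5, 5)
```

The batch dimension is the one that stays unknown. On the known side it is 1, so broadcasting cannot pin it down.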

Inspecting the generated TensorRT engine confirms that the output shape now contains -1.

2020-09-16 14:01:02 - __main__ - DEBUG - === Network Description ===

2020-09-16 14:01:02 - __main__ - DEBUG - Input 0 | Name: input.1 | Shape: (1, 3, 5, 5)

2020-09-16 14:01:02 - __main__ - DEBUG - Output 0 | Name: 15 | Shape: (-1, 5, 5, 5)
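The post does not show the debugging script that printed this description. Below is a minimal sketch of one way to produce similar lines with the TensorRT 7 Python API. It assumes an already-deserialized `ICudaEngine` and uses its binding accessors: `num_bindings`, `binding_is_input`, `get_binding_name` and `get_binding_shape`. The function name `describe_engine` and the extra check that collects bindings containing -1 are hypothetical additions. The function never imports `tensorrt` itself, so it is exercised here with a stub object.

```python
def describe_engine(engine):
    """Format each binding of a TensorRT 7 ICudaEngine and collect names with -1 dims.

    Only uses the engine's binding accessors, so any object with the same
    methods (e.g. a test stub) works too.
    """
    lines = ["=== Network Description ==="]
    dynamic = []
    counters = {"Input": 0, "Output": 0}
    for i in range(engine.num_bindings):
        kind = "Input" if engine.binding_is_input(i) else "Output"
        name = engine.get_binding_name(i)
        dims = engine.get_binding_shape(i)
        shape = tuple(dims[j] for j in range(len(dims)))  # trt.Dims -> plain tuple
        lines.append(f"{kind} {counters[kind]} | Name: {name} | Shape: {shape}")
        counters[kind] += 1
        if -1 in shape:
            dynamic.append(name)
    return lines, dynamic


class StubEngine:
    """Hypothetical stand-in mirroring the bindings reported for the first engine."""
    _bindings = [("input.1", (1, 3, 5, 5), True), ("15", (-1, 5, 5, 5), False)]
    num_bindings = len(_bindings)

    def binding_is_input(self, i):
        return self._bindings[i][2]

    def get_binding_name(self, i):
        return self._bindings[i][0]

    def get_binding_shape(self, i):
        return self._bindings[i][1]


lines, dynamic = describe_engine(StubEngine())
print("\n".join(lines))
print("bindings with -1:", dynamic)
```

A check like `dynamic == []` after every conversion would catch this kind of regression before anyone compares outputs numerically.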

The same block was then reimplemented in a different way and exported to ONNX for visualization.

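Here, "a different way" means chaining the nodes explicitly, with no variable steps that shrink or grow the shape. That leaves no room for the kind of non-explicit shape (e.g. -1) that squeeze > ??? > unsqueeze can introduce. In the graph below, this means the tensor stays 4D throughout:

- Transpose with perm=[0, 2, 1, 3] replaces Squeeze + Transpose.
- A Conv with a (3, 1) kernel replaces the 1D Conv.
- Mul relies on broadcasting instead of Expand.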
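Here is a shape-only sketch of the rewritten block, following the fixed graph below. Every intermediate tensor stays 4D, so the parser never has to rebuild a shape from a Squeeze/Unsqueeze pair or an Expand. The helper names are hypothetical. The permutation [0, 2, 1, 3], the (3, 1) kernel and the pads [1, 0, 1, 0] are taken from that graph.

```python
# Shape-only sketch of the rewritten block: every tensor stays 4D.

def conv_out_len(n, k, pad_begin, pad_end, stride=1, dilation=1):
    # Standard convolution output-length formula.
    return (n + pad_begin + pad_end - dilation * (k - 1) - 1) // stride + 1

def permute(shape, perm):
    # Like ONNX Transpose with `perm`, or tensor.permute(*perm).
    return tuple(shape[p] for p in perm)

def conv2d(shape, out_channels, kernel, pads):
    # pads follow ONNX order: [h_begin, w_begin, h_end, w_end]
    n, _, h, w = shape
    kh, kw = kernel
    return (n, out_channels,
            conv_out_len(h, kh, pads[0], pads[2]),
            conv_out_len(w, kw, pads[1], pads[3]))

def broadcast(a, b):
    # Equal-rank broadcasting for dims that are equal or 1.
    assert len(a) == len(b) and all(x == y or 1 in (x, y) for x, y in zip(a, b))
    return tuple(max(x, y) for x, y in zip(a, b))

feature = (1, 5, 5, 5)                         # %4
gate = (1, 5, 1, 1)                            # %5: GlobalAveragePool
trace = [gate]
gate = permute(gate, (0, 2, 1, 3))             # %6: (1, 1, 5, 1)
trace.append(gate)
gate = conv2d(gate, 1, (3, 1), (1, 0, 1, 0))   # %7: (1, 1, 5, 1)
trace.append(gate)
gate = permute(gate, (0, 2, 1, 3))             # %8: (1, 5, 1, 1); Sigmoid keeps it
trace.append(gate)
out = broadcast(feature, gate)                 # %10: Mul, no Expand needed
print(trace, out)
```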

* Many thanks to Dr. Park for the help (- -)(_ _)

The resulting ONNX model structure is as follows.

graph(%input.1 : Float(1, 3, 5, 5),

%eca.conv.weight : Float(1, 1, 3, 1),

%m1.weight : Float(5, 3, 3, 3),

%m2.weight : Float(5, 5, 3, 3)):

%4 : Float(1, 5, 5, 5) = onnx::Conv[dilations=[1, 1], group=1, kernel_shape=[3, 3], pads=[1, 1, 1, 1], strides=[1, 1]](%input.1, %m1.weight) # /home/seohee/.local/lib/python3.5/site-packages/torch/nn/modules/conv.py:342:0

%5 : Float(1, 5, 1, 1) = onnx::GlobalAveragePool(%4) # /home/seohee/.local/lib/python3.5/site-packages/torch/nn/functional.py:768:0

%6 : Float(1, 1, 5, 1) = onnx::Transpose[perm=[0, 2, 1, 3]](%5) # /home/seohee/Project/convert/mini_test/mini_model.py:475:0

%7 : Float(1, 1, 5, 1) = onnx::Conv[dilations=[1, 1], group=1, kernel_shape=[3, 1], pads=[1, 0, 1, 0], strides=[1, 1]](%6, %eca.conv.weight) # /home/seohee/.local/lib/python3.5/site-packages/torch/nn/modules/conv.py:342:0

%8 : Float(1, 5, 1, 1) = onnx::Transpose[perm=[0, 2, 1, 3]](%7) # /home/seohee/Project/convert/mini_test/mini_model.py:475:0

%9 : Float(1, 5, 1, 1) = onnx::Sigmoid(%8) # /home/seohee/.local/lib/python3.5/site-packages/torch/nn/modules/activation.py:271:0

%10 : Float(1, 5, 5, 5) = onnx::Mul(%4, %9) # /home/seohee/Project/convert/mini_test/mini_model.py:477:0

%11 : Float(1, 5, 5, 5) = onnx::Conv[dilations=[1, 1], group=1, kernel_shape=[3, 3], pads=[1, 1, 1, 1], strides=[1, 1]](%10, %m2.weight) # /home/seohee/.local/lib/python3.5/site-packages/torch/nn/modules/conv.py:342:0

return (%11)
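The rewrite relies on one equivalence. A Conv1d with kernel 3 and padding 1 computes the same values as a Conv2d with kernel (3, 1) and padding (1, 0) applied to the channels laid out as a (C, 1) column. That is why `eca.conv.weight` changes from Float(1, 1, 3) to Float(1, 1, 3, 1). Below is a pure-Python check of that equivalence for single-channel, bias-free convolutions, using made-up values. In PyTorch, the weight conversion itself would be `weight.unsqueeze(-1)`.

```python
def conv1d(xs, w, pad):
    # Single-channel 1D cross-correlation with zero padding (like nn.Conv1d, no bias).
    padded = [0.0] * pad + list(xs) + [0.0] * pad
    k = len(w)
    return [sum(w[j] * padded[i + j] for j in range(k))
            for i in range(len(padded) - k + 1)]

def conv2d(img, w, pad_h, pad_w):
    # Single-channel 2D cross-correlation with zero padding (like nn.Conv2d, no bias).
    h, wd = len(img), len(img[0])
    kh, kw = len(w), len(w[0])
    blank = [0.0] * (wd + 2 * pad_w)
    padded = ([blank[:] for _ in range(pad_h)]
              + [[0.0] * pad_w + list(row) + [0.0] * pad_w for row in img]
              + [blank[:] for _ in range(pad_h)])
    out_h = h + 2 * pad_h - kh + 1
    out_w = wd + 2 * pad_w - kw + 1
    return [[sum(w[a][b] * padded[i + a][j + b] for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

channel_means = [0.2, -1.0, 0.5, 3.0, 1.5]   # one pooled value per channel (made-up)
w1d = [0.25, 0.5, -0.75]                     # Conv1d weight, shape (1, 1, 3) (made-up)
w2d = [[v] for v in w1d]                     # same weight as (1, 1, 3, 1)

out1 = conv1d(channel_means, w1d, pad=1)
column = [[v] for v in channel_means]        # the 5 channels as a 5x1 "image"
out2 = [row[0] for row in conv2d(column, w2d, pad_h=1, pad_w=0)]
print(out1)
print(out2)
```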

The key part of converting this model to TensorRT is as follows.

...

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Transpose]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 5

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Transpose] inputs: [5 -> (1, 5, 1, 1)],

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 2) [Shuffle] for ONNX node:

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 6 for ONNX tensor: 6

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Transpose] outputs: [6 -> (1, 1, 5, 1)],

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Conv]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 6

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: eca.conv.weight

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Conv] inputs: [6 -> (1, 1, 5, 1)], [eca.conv.weight -> (1, 1, 3, 1)],

[TensorRT] VERBOSE: builtin_op_importers.cpp:450: Convolution input dimensions: (1, 1, 5, 1)

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 3) [Convolution] for ONNX node:

[TensorRT] VERBOSE: builtin_op_importers.cpp:533: Using kernel: (3, 1), strides: (1, 1), prepadding: (1, 0), postpadding: (1, 0), dilations: (1, 1), numOutputs: 1

[TensorRT] VERBOSE: builtin_op_importers.cpp:534: Convolution output dimensions: (1, 1, 5, 1)

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 7 for ONNX tensor: 7

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Conv] outputs: [7 -> (1, 1, 5, 1)],

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Transpose]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 7

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Transpose] inputs: [7 -> (1, 1, 5, 1)],

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 4) [Shuffle] for ONNX node:

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 8 for ONNX tensor: 8

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Transpose] outputs: [8 -> (1, 5, 1, 1)],

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Sigmoid]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 8

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Sigmoid] inputs: [8 -> (1, 5, 1, 1)],

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 5) [Activation] for ONNX node:

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 9 for ONNX tensor: 9

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Sigmoid] outputs: [9 -> (1, 5, 1, 1)],

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Mul]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 4

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 9

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Mul] inputs: [4 -> (1, 5, 5, 5)], [9 -> (1, 5, 1, 1)],

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 6) [ElementWise] for ONNX node:

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 10 for ONNX tensor: 10

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Mul] outputs: [10 -> (1, 5, 5, 5)],

[TensorRT] VERBOSE: ModelImporter.cpp:103: Parsing node: [Conv]

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: 10

[TensorRT] VERBOSE: ModelImporter.cpp:119: Searching for input: m2.weight

[TensorRT] VERBOSE: ModelImporter.cpp:125: [Conv] inputs: [10 -> (1, 5, 5, 5)], [m2.weight -> (5, 5, 3, 3)],

[TensorRT] VERBOSE: builtin_op_importers.cpp:450: Convolution input dimensions: (1, 5, 5, 5)

[TensorRT] VERBOSE: ImporterContext.hpp:141: Registering layer: (Unnamed Layer* 7) [Convolution] for ONNX node:

[TensorRT] VERBOSE: builtin_op_importers.cpp:533: Using kernel: (3, 3), strides: (1, 1), prepadding: (1, 1), postpadding: (1, 1), dilations: (1, 1), numOutputs: 5

[TensorRT] VERBOSE: builtin_op_importers.cpp:534: Convolution output dimensions: (1, 5, 5, 5)

[TensorRT] VERBOSE: ImporterContext.hpp:116: Registering tensor: 11_1 for ONNX tensor: 11

[TensorRT] VERBOSE: ModelImporter.cpp:179: [Conv] outputs: [11 -> (1, 5, 5, 5)],

[TensorRT] VERBOSE: ModelImporter.cpp:507: Marking 11_1 as output: 11

...

Inspecting the generated TensorRT engine shows that the output shape is now explicit, with the batch dimension fixed at 1.

2020-09-16 14:05:41 - __main__ - DEBUG - === Network Description ===

2020-09-16 14:05:41 - __main__ - DEBUG - Input 0 | Name: input.1 | Shape: (1, 3, 5, 5)

2020-09-16 14:05:41 - __main__ - DEBUG - Output 0 | Name: 11 | Shape: (1, 5, 5, 5)

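In conclusion: TensorRT 7.x reportedly supports dynamic batch sizes when parsing ONNX models, but this seems to apply only to the network's original input. For example, when the input is declared as (-1, 3, 224, 224), you need an optimization profile that specifies the minimum and maximum batch size (plus an optimal one). TensorRT appears to use that range to optimize as aggressively as it can.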

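However, as in the example above, a variable dimension (e.g. -1) can appear in the middle of the network rather than at the input. TensorRT apparently does not fully account for that case during optimization, which seems to be why it produces this kind of anomaly.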
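The profile step mentioned above can be set up with the TensorRT 7 Python API roughly as follows. This is a sketch with these assumptions:

- The network is an explicit-batch network whose input was exported with a -1 batch dimension.
- The name `input.1` and the 3x224x224 size are borrowed from the examples in this post.
- `add_batch_profile` is a hypothetical helper.

It only calls `IBuilder.create_optimization_profile`, `IOptimizationProfile.set_shape` and `IBuilderConfig.add_optimization_profile`, so it is exercised here against stub objects.

```python
def add_batch_profile(builder, config, input_name, chw, min_batch, opt_batch, max_batch):
    """Register an optimization profile whose only dynamic dimension is the batch.

    Assumes `builder`/`config` are a TensorRT 7 IBuilder / IBuilderConfig and that
    the network input `input_name` was declared with shape (-1, *chw).
    """
    if not (1 <= min_batch <= opt_batch <= max_batch):
        raise ValueError("need 1 <= min_batch <= opt_batch <= max_batch")
    profile = builder.create_optimization_profile()
    profile.set_shape(input_name,
                      (min_batch, *chw),   # smallest shape the engine must accept
                      (opt_batch, *chw),   # shape TensorRT tunes kernels for
                      (max_batch, *chw))   # largest shape the engine must accept
    return config.add_optimization_profile(profile)


class StubProfile:
    """Hypothetical stub that records set_shape calls instead of calling TensorRT."""
    def __init__(self):
        self.calls = []

    def set_shape(self, name, min_shape, opt_shape, max_shape):
        self.calls.append((name, min_shape, opt_shape, max_shape))


class StubBuilder:
    def __init__(self):
        self.profile = StubProfile()

    def create_optimization_profile(self):
        return self.profile


class StubConfig:
    def __init__(self):
        self.profiles = []

    def add_optimization_profile(self, profile):
        self.profiles.append(profile)
        return len(self.profiles) - 1   # index of the newly added profile


builder, config = StubBuilder(), StubConfig()
index = add_batch_profile(builder, config, "input.1", (3, 224, 224), 1, 4, 8)
print(index, builder.profile.calls)
```

With real TensorRT objects, two steps are not shown here:

- Creating the network with the `EXPLICIT_BATCH` creation flag.
- Building the engine with `builder.build_engine(network, config)`.

A profile like this only describes the network input. It does not help with a -1 that the parser creates in the middle of the graph.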

Update (2020.09.16)

The TensorRT documentation seems to explain this. I don't recall this explanation being there when I first used version 7.0, so it appears to have been added since.

See the section below.

7.5. Execution Tensors vs. Shape Tensors, TensorRT Developer Guide :: NVIDIA Deep Learning SDK Documentation (docs.nvidia.com)

The key points are as follows.

Engines using dynamic shapes employ a two-phase execution strategy.

- Compute the shapes of all tensors

- Stream work to the GPU.

Phase 1 is implicit and driven by demand, such as when output dimensions are requested. Phase 2 is the same as in prior versions of TensorRT. The two phase execution puts some limits on dynamism that are important to understand.

The key limits are:

- The rank of a tensor must be determinable at build time.

- A tensor is either an execution tensor, shape tensor, or both. Tensors classified as shape tensors are subject to limits discussed below.

An execution tensor is a traditional TensorRT tensor. A shape tensor is a tensor that is related to shape calculations.

It must be 0D or 1D, have type Int32, and its shape must be determinable at build time.

For example, there is an IShapeLayer whose output is a 1D tensor containing the dimensions of the input tensor.

The output is a shape tensor. IShuffleLayer accepts an optional second input that can specify reshaping dimensions.

The second input must be a shape tensor.

Some layers are “polymorphic” with respect to the kinds of tensors they handle.

For example, IElementWiseLayer can sum two INT32 execution tensors or sum two INT32 shape tensors.

The type of tensor depends on their ultimate use.

If the sum is used to reshape another tensor, then it is a “shape tensor”.
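Here is how this maps onto the first log. `Shape` (tensor 12) produces a 1D shape tensor whose length, 4, is known at build time (`[12 -> (4)]`). So the rank of the `Expand` output is fixed at 4. Its values, though, are only computed in phase 1, and the parser at this version evidently did not fold them into constants, so every output dimension is reported as -1. The sketch below models that distinction. It is a simplification for illustration, not how TensorRT represents shape tensors internally, and it assumes the input and target dims are broadcast-compatible.

```python
from typing import Optional, Sequence, Tuple

UNKNOWN = -1

def expand_output_dims(input_dims: Sequence[int],
                       shape_len: int,
                       shape_values: Optional[Sequence[int]]) -> Tuple[int, ...]:
    """Build-time view of an Expand whose target shape comes from a shape tensor.

    shape_len is the (build-time) length of the 1D shape tensor; shape_values is
    None when its contents are only available at runtime.
    """
    rank = max(len(input_dims), shape_len)   # rank is always known at build time
    if shape_values is None:
        # Values only available at runtime (phase 1): every dim is unknown.
        return (UNKNOWN,) * rank
    # Values known at build time (e.g. constant-folded): ordinary broadcasting,
    # assuming each pair of dims is equal or contains a 1.
    padded_in = (1,) * (rank - len(input_dims)) + tuple(input_dims)
    padded_shape = (1,) * (rank - shape_len) + tuple(shape_values)
    return tuple(max(a, b) for a, b in zip(padded_in, padded_shape))

print(expand_output_dims((1, 5, 1, 1), 4, None))           # (-1, -1, -1, -1), as in the log
print(expand_output_dims((1, 5, 1, 1), 4, (1, 5, 5, 5)))   # (1, 5, 5, 5)
```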
