[GPU] GPU Performance and Titan V, RTX 2080 Ti Benchmark

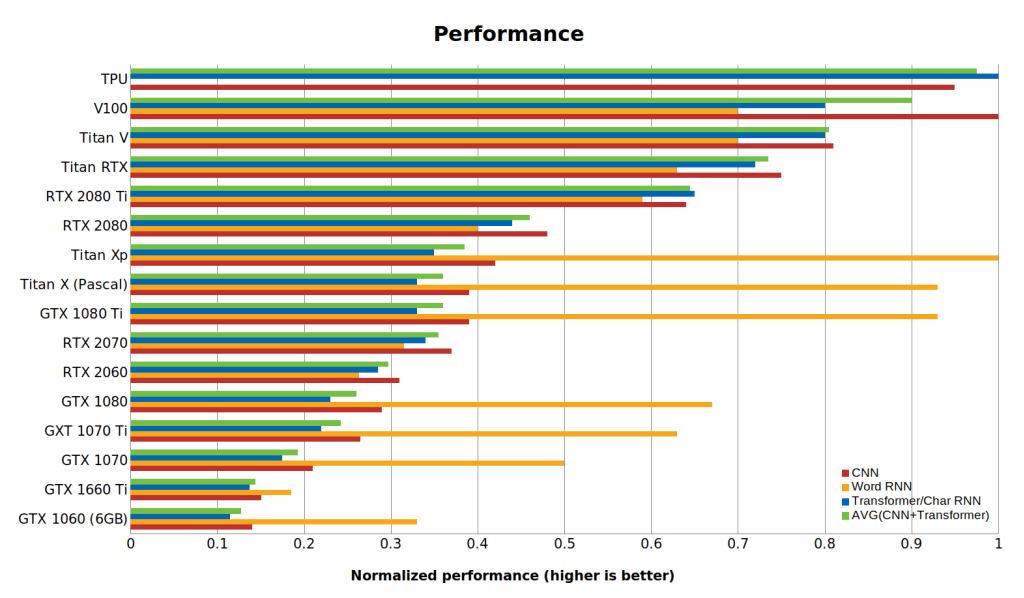

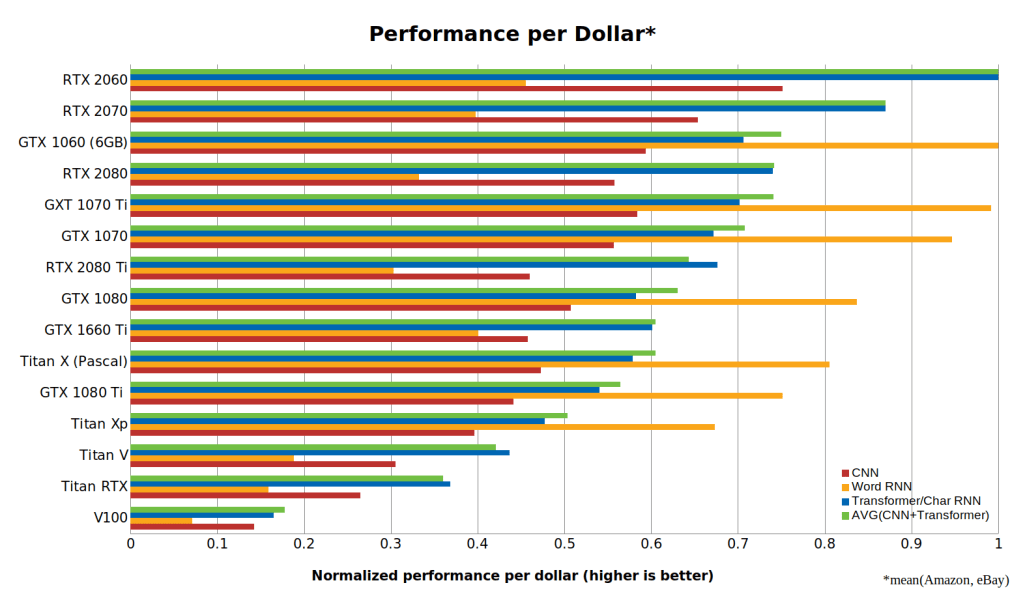

TPU

V100

Titan V

Titan RTX

RTX 2080 Ti

RTX 2080

Titan Xp

Titan X

GTX 1080 Ti

RTX 2070

RTX 2060

GTX 1080

GTX 1070 Ti

GTX 1070

GTX 1660 Ti

GTX 1060

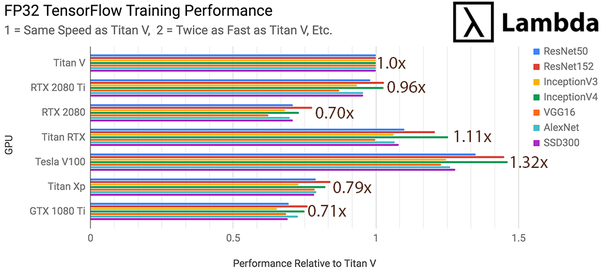

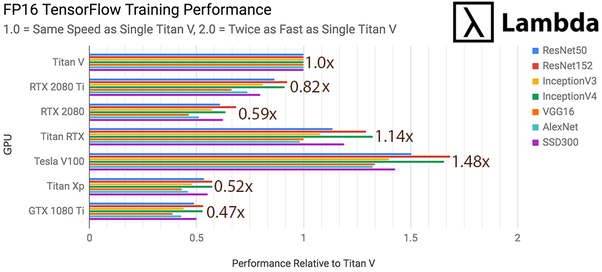

Titan V Benchmark

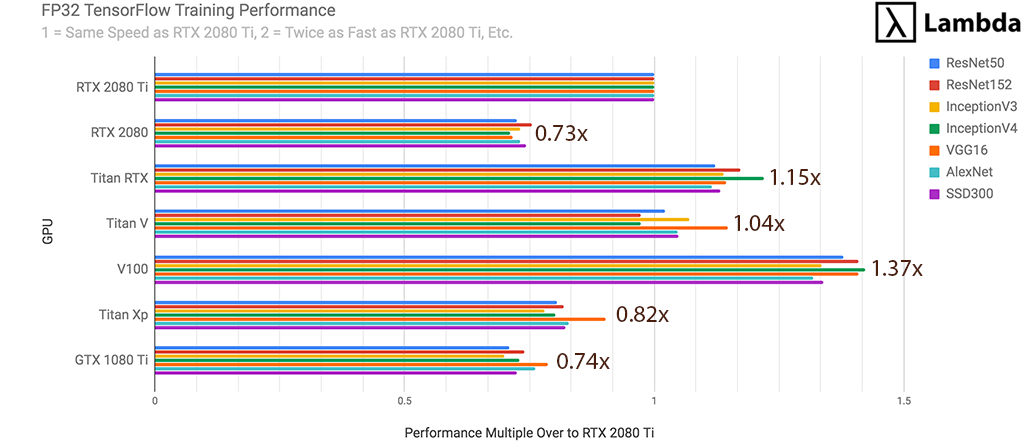

FP32 TensorFlow Training Performance

FP16 TensorFlow Training Performance

RTX 2080 Ti Benchmark

RTX 2080 Ti - FP32 TensorFlow Performance (1 GPU)

For FP32 training of neural networks, the RTX 2080 Ti is...

- 37% faster than RTX 2080

- 35% faster than GTX 1080 Ti

- 22% faster than Titan Xp

- 96% as fast as Titan V

- 87% as fast as Titan RTX

- 73% as fast as Tesla V100 (32 GB)

as measured by the # images processed per second during training.

RTX 2080 Ti - FP16 TensorFlow Performance (1 GPU)

For FP16 training of neural networks, the RTX 2080 Ti is...

- 72% faster than GTX 1080 Ti

- 59% faster than Titan Xp

- 32% faster than RTX 2080

- 81% as fast as Titan V

- 71% as fast as Titan RTX

- 55% as fast as Tesla V100 (32 GB)

as measured by the # images processed per second during training.
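The two lists above mix two phrasings ("X% faster than" and "Y% as fast as"), which makes the cards hard to compare at a glance. The sketch below puts every card on one scale with the RTX 2080 Ti as the baseline of 1.00. The function and variable names are illustrative, not taken from the benchmark source, and the conversion assumes "X% faster" means a throughput ratio of 1 + X/100.

```python
# Figures copied from the FP32 and FP16 lists above; RTX 2080 Ti = 1.00.
# ("faster", p):  the 2080 Ti is p% faster than that card.
# ("as_fast", p): the 2080 Ti runs at p% of that card's speed.
FP32 = {
    "RTX 2080": ("faster", 37),
    "GTX 1080 Ti": ("faster", 35),
    "Titan Xp": ("faster", 22),
    "Titan V": ("as_fast", 96),
    "Titan RTX": ("as_fast", 87),
    "Tesla V100 (32 GB)": ("as_fast", 73),
}
FP16 = {
    "GTX 1080 Ti": ("faster", 72),
    "Titan Xp": ("faster", 59),
    "RTX 2080": ("faster", 32),
    "Titan V": ("as_fast", 81),
    "Titan RTX": ("as_fast", 71),
    "Tesla V100 (32 GB)": ("as_fast", 55),
}


def relative_throughput(kind, pct):
    """Return the other card's throughput as a multiple of the RTX 2080 Ti's."""
    if kind == "faster":
        return 1.0 / (1.0 + pct / 100.0)
    if kind == "as_fast":
        return 1.0 / (pct / 100.0)
    raise ValueError(f"unknown comparison kind: {kind}")


def normalize(table):
    """Convert one comparison table into {gpu: relative throughput}."""
    return {gpu: round(relative_throughput(kind, pct), 2)
            for gpu, (kind, pct) in table.items()}


fp32_relative = normalize(FP32)
fp16_relative = normalize(FP16)

for gpu in FP32:
    print(f"{gpu:20s} FP32 {fp32_relative[gpu]:.2f}  FP16 {fp16_relative[gpu]:.2f}")
```

Read this way, a value below 1.00 means the card is slower than the RTX 2080 Ti and a value above 1.00 means it is faster.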

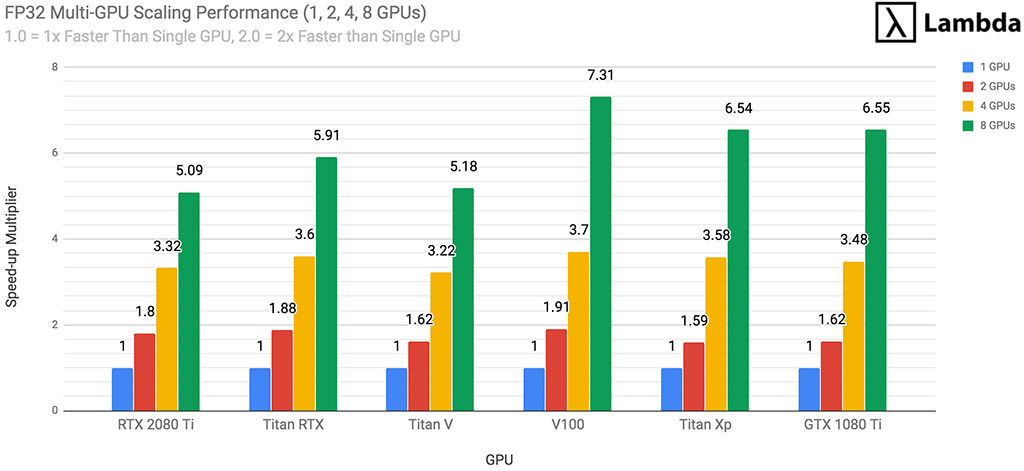

FP32 Multi-GPU Scaling Performance (1, 2, 4, 8 GPUs)

For each GPU type (RTX 2080 Ti, RTX 2080, etc.) we measured performance while training with 1, 2, 4, and 8 GPUs on each neural network and then averaged the results. The chart below provides guidance as to how each GPU scales during multi-GPU training of neural networks in FP32. The RTX 2080 Ti scales as follows:

- 2x RTX 2080 Ti GPUs will train ~1.8x faster than 1x RTX 2080 Ti

- 4x RTX 2080 Ti GPUs will train ~3.3x faster than 1x RTX 2080 Ti

- 8x RTX 2080 Ti GPUs will train ~5.1x faster than 1x RTX 2080 Ti
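One way to read the scaling figures above is as parallel efficiency, that is, the measured speed-up divided by the number of GPUs. The sketch below is only arithmetic on the three quoted numbers; it assumes "~1.8x faster" means 1.8 times the single-GPU throughput.

```python
# Speed-ups quoted above for the RTX 2080 Ti (FP32), keyed by GPU count.
speedup = {1: 1.0, 2: 1.8, 4: 3.3, 8: 5.1}

# Parallel efficiency: 1.0 would be perfect linear scaling.
efficiency = {n: s / n for n, s in speedup.items()}

for n, e in efficiency.items():
    print(f"{n} GPU(s): {speedup[n]:.1f}x speed-up, {e:.0%} efficiency")
```

Efficiency falls from 90% with two GPUs to roughly 64% with eight, so each added card contributes less than the one before it.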

RTX 2080 Ti - FP16 vs. FP32

Using FP16 can reduce training times and enable larger batch sizes/models without significantly impacting the accuracy of the trained model. Compared with FP32, FP16 training on the RTX 2080 Ti is...

- 59% faster on ResNet-50

- 52% faster on ResNet-152

- 47% faster on Inception v3

- 34% faster on Inception v4

- 50% faster on VGG-16

- 38% faster on AlexNet

- 31% faster on SSD300

as measured by the # of images processed per second during training. This gives an average speed-up of +44.6%.
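As a quick check, the average can be recomputed from the per-model figures listed above. The rounded percentages average to about 44.4%, slightly below the stated 44.6%; the difference presumably comes from the source averaging unrounded measurements, though that is an assumption.

```python
from statistics import mean

# FP16-over-FP32 speed-up on the RTX 2080 Ti, in percent, as listed above.
speedup_pct = {
    "ResNet-50": 59,
    "ResNet-152": 52,
    "Inception v3": 47,
    "Inception v4": 34,
    "VGG-16": 50,
    "AlexNet": 38,
    "SSD300": 31,
}

average_pct = mean(speedup_pct.values())
print(f"Average FP16 speed-up from rounded figures: {average_pct:.1f}%")
```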

Caveat emptor: If you're new to machine learning or simply testing code, we recommend using FP32. Lowering precision to FP16 may interfere with convergence.
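One common reason FP16 can hurt convergence is underflow: very small gradient values fall below the smallest number half precision can represent and become zero. The sketch below illustrates this with Python's standard `struct` module, which supports the IEEE 754 half-precision format. The gradient value and the scale factor are hypothetical, picked only for illustration, and the scaling trick shown is a simplified version of what is usually called loss scaling, not the benchmark authors' setup.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


tiny_gradient = 1e-8   # hypothetical gradient value, for illustration only
loss_scale = 1024.0    # hypothetical scale factor (a power of two)

# Cast directly to FP16: the value is below the smallest positive
# half-precision number (about 6e-8), so it rounds to zero.
flushed = to_fp16(tiny_gradient)

# Scale before the cast so the value stays representable, then divide
# in full precision to get back close to the original value.
recovered = to_fp16(tiny_gradient * loss_scale) / loss_scale

print("direct FP16 cast:", flushed)
print("scaled, cast, unscaled:", recovered)
```

The direct cast loses the gradient entirely, while the scaled version recovers it to within a fraction of a percent.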

GPU Prices

- RTX 2080 Ti: $1,199.00

- RTX 2080: $799.00

- Titan RTX: $2,499.00

- Titan V: $2,999.00

- Tesla V100 (32 GB): ~$8,200.00

- GTX 1080 Ti: $699.00

- Titan Xp: $1,200.00
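Combining these prices with the single-GPU FP32 comparison earlier in the post gives a rough throughput-per-dollar ranking. This is a sketch under stated assumptions: the relative throughput values are derived from the rounded percentages in the FP32 list (RTX 2080 Ti = 1.0), the Tesla V100 price is approximate, and costs such as power, cooling, and memory capacity are ignored.

```python
# FP32 training throughput relative to the RTX 2080 Ti, derived from the
# "X% faster than" / "Y% as fast as" figures in the FP32 list.
relative_fp32 = {
    "RTX 2080 Ti": 1.0,
    "RTX 2080": 1 / 1.37,            # 2080 Ti is 37% faster
    "GTX 1080 Ti": 1 / 1.35,         # 2080 Ti is 35% faster
    "Titan Xp": 1 / 1.22,            # 2080 Ti is 22% faster
    "Titan V": 1 / 0.96,             # 2080 Ti is 96% as fast
    "Titan RTX": 1 / 0.87,           # 2080 Ti is 87% as fast
    "Tesla V100 (32 GB)": 1 / 0.73,  # 2080 Ti is 73% as fast
}

# Prices in USD from the list above (the V100 figure is approximate).
price_usd = {
    "RTX 2080 Ti": 1199,
    "RTX 2080": 799,
    "Titan RTX": 2499,
    "Titan V": 2999,
    "Tesla V100 (32 GB)": 8200,
    "GTX 1080 Ti": 699,
    "Titan Xp": 1200,
}

# Relative FP32 throughput bought per 1,000 USD.
per_1000_usd = {gpu: relative_fp32[gpu] / price_usd[gpu] * 1000
                for gpu in price_usd}

ranking = sorted(per_1000_usd, key=per_1000_usd.get, reverse=True)
for gpu in ranking:
    print(f"{gpu:20s} {per_1000_usd[gpu]:.2f} per $1,000")
```

On these numbers the GTX 1080 Ti offers the most FP32 throughput per dollar and the Tesla V100 the least, with the RTX 2080 Ti in third place.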

Reference 1: Which GPU(s) to Get for Deep Learning (timdettmers.com), https://timdettmers.com/2019/04/03/which-gpu-for-deep-learning/

Reference 2: Is the Titan X better than the 1080 ti for deep learning, and if so, why? (www.quora.com), https://www.quora.com/Is-the-Titan-X-better-than-the-1080-ti-for-deep-learning-and-if-so-why

Reference 3: RTX 2080 Ti Deep Learning Benchmarks with TensorFlow - 2019 (lambdalabs.com), https://lambdalabs.com/blog/2080-ti-deep-learning-benchmarks/
